Point of View: The science of cinema

I am always amused when people tell me that their ears and eyes would not be good enough to do my job as a reviewer. They are convinced that they wouldn’t hear the difference between two pairs of speakers, or see the variation in picture quality from one HD screen to another. While I am the first to highlight my 24-carat golden ears and eagle-eyed vision to anyone requiring AV equipment testing, the reality is a little different. My physical sensory faculties are really no better than those of most other normal, healthy people, although in terms of reviewing hours they have been round the block a bit.

The trouble is one of perception, because for most people CD sound and budget speakers are quite good enough and Full HD is simply great. So would they want to upgrade to a 4K picture with high-res audio? Meh, they reckon they wouldn’t notice the difference.

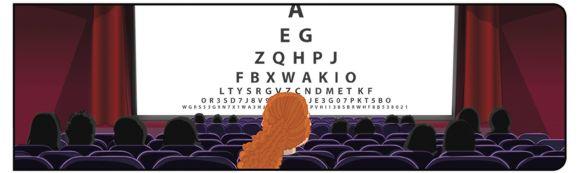

They might just have a point, you know. Will a 4K picture actually look any better on a normal-sized TV than standard 1080p? By definition, someone with normal 20/20 vision is capable of resolving lines spaced roughly 1/16th of an inch apart at 20 feet, or about 1.75mm at six metres in new money. The maximum human visual acuity using this scale is about 20/10 vision, or the ability to differentiate 0.87mm lines at six metres. Scaling that to a typical TV-watching distance of four metres, you can say that someone with really good eyesight can differentiate lines, or for our purposes pixels, as small as 0.58mm.
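For anyone who wants to check the sums, here is a minimal Python sketch. It assumes the smallest resolvable detail scales linearly with viewing distance (a small-angle approximation) and takes the 0.87mm-at-six-metres figure quoted above as its starting point. The function name is mine, purely for illustration.

```python
def resolvable_detail_mm(detail_at_ref_mm, ref_distance_m, viewing_distance_m):
    """Scale a resolvable detail size linearly with viewing distance.

    Assumption: small-angle approximation, so detail size is
    proportional to distance.
    """
    return detail_at_ref_mm * viewing_distance_m / ref_distance_m


# 20/10 vision: 0.87mm at six metres, rescaled to a four-metre sofa distance
print(round(resolvable_detail_mm(0.87, 6.0, 4.0), 2))  # 0.58
```

Swap in your own seating distance for the 4.0 to see how the threshold shifts as you move closer to or further from the screen.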

Now then (stay awake at the back), a 50in screen with a 1920 x 1080 resolution has pixels pretty much exactly 0.58mm x 0.58mm in size. So any increase in resolution on a 50in screen will reduce individual pixel dimensions to a size below the threshold that the human eye can resolve at a distance of four metres. So for screens of 50in and below, HD formats like 4K x 2K are pointless and our doubters really won’t see the difference.
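The pixel-size figure is easy enough to verify. The sketch below is only an illustration under stated assumptions: it treats pixels as square, works the size out from the diagonal, and takes 3840 x 2160 as the consumer flavour of "4K x 2K". The function and constant names are hypothetical.

```python
import math

MM_PER_INCH = 25.4


def pixel_pitch_mm(diagonal_in, h_pixels, v_pixels):
    """Side length of one pixel in mm, assuming square pixels."""
    # Length of the screen diagonal measured in pixels
    diagonal_px = math.hypot(h_pixels, v_pixels)
    return diagonal_in * MM_PER_INCH / diagonal_px


print(round(pixel_pitch_mm(50, 1920, 1080), 2))  # 0.58 (Full HD)
print(round(pixel_pitch_mm(50, 3840, 2160), 2))  # 0.29 (assumed 4K resolution)
```

On these numbers a 50in Full HD pixel sits right on the 0.58mm threshold for four metres, while the 4K pixel is half that size.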

The same sort of maths could be applied to ultra-high res audio if there were any way of reliably putting performance metrics on human hearing. While we can all hear the difference between uncompressed BD mixes and a 128kbps MP3, what about higher resolutions? Can we distinguish a recording made and reproduced at 96kHz/24bit from one mastered and decoded at 192kHz/24bit? Arguably the human ear, with its crude organic wobbly bits, is not even close to being capable of distinguishing the two.

But in the real world...

So have we reached a biological impasse beyond which better technology will no longer yield improvement, unless, of course, you are an eagle or a bat? While the maths would certainly suggest that is the case, the reality is not so cut-and-dried. For example, on a 32in screen it is easy to see the difference between a 720p and a 1080p picture, despite the definition of 20/20 vision, or even perfect 20/10 vision, indicating that we cannot. Loudspeakers with super-tweeters, with frequencies that extend to 40kHz, definitely sound different to speakers with a 20kHz cut-off. Given that most boffins say human hearing only extends to 20kHz, the maths and the reality are again at odds. Clearly we complex human beings are capable of resolving audio and visual input to a level of detail that utterly belies our current basic measurements of sight and hearing ability.

The good news here is that everyone’s senses are more than capable of enjoying the benefits of high-resolution audio and seeing the additional detail of a 4K picture. So to all those people who believe they could not do my job due to their inferior auditory and visual senses, you are most definitely wrong because any old chump could do it. Oh hang on…

Do you trust your eyes and ears when it comes to AV?


This column first appeared in the June 2012 issue of Home Cinema Choice
